Key Concepts

The following concepts are referenced throughout this case study and explain how the system was redesigned to maintain uptime under heavy traffic:

- Distributed Cache: A caching approach in which frequently requested data is stored across multiple locations (here, Cloudflare's edge network), so that most requests are answered without reaching the backend. This reduces backend load and allows the system to serve high traffic efficiently.

- Cloudflare Workers: A serverless platform that runs application code at the network edge, close to users, which reduces latency and enables fast, consistent performance at a global scale.

- Mutex Lock: A coordination mechanism that ensures only one process performs a specific operation at a time. In this case, it was used to prevent multiple requests from triggering the same expensive backend operation simultaneously.
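
The mutex concept can be illustrated with a small lease-based lock. This is a minimal TypeScript sketch, not the implementation used in this project. The `LeaseLock` class and its method names are hypothetical, and the lock state lives in an in-memory `Map`, which only coordinates requests inside a single Worker isolate. Coordinating across Cloudflare's edge would require shared state (for example, a Durable Object), which the case study does not describe. The lease expiry means that a holder which crashes mid-update cannot block revalidation forever.

```typescript
// Hypothetical lease-based mutex, kept in memory for illustration only.
// JavaScript runs tryAcquire without interruption, so its check-and-set is
// atomic within one isolate, but not across isolates or data centres;
// that would need shared storage.
interface Lease {
  owner: string;     // identifier of the request holding the lock
  expiresAt: number; // epoch ms after which the lease may be taken over
}

class LeaseLock {
  private leases = new Map<string, Lease>();

  // Returns true if `owner` now holds the lock for `key`. An expired lease
  // counts as free, so a holder that crashed cannot block others forever.
  tryAcquire(key: string, owner: string, ttlMs: number, now: number = Date.now()): boolean {
    const current = this.leases.get(key);
    if (current && current.expiresAt > now && current.owner !== owner) {
      return false; // another request holds a live lease
    }
    this.leases.set(key, { owner, expiresAt: now + ttlMs });
    return true;
  }

  // Releases the lock only if `owner` still holds it, so a request whose
  // lease already expired cannot free a lock that someone else now holds.
  release(key: string, owner: string): void {
    const current = this.leases.get(key);
    if (current && current.owner === owner) {
      this.leases.delete(key);
    }
  }
}

const lock = new LeaseLock();
console.log(lock.tryAcquire("/api/data", "req-1", 5000, 0));    // true
console.log(lock.tryAcquire("/api/data", "req-2", 5000, 100));  // false: req-1 holds a live lease
console.log(lock.tryAcquire("/api/data", "req-2", 5000, 6000)); // true: req-1's lease has expired
```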

Business Problem

The client's API depended on specific Windows-only software, which ruled out a move to more readily scalable infrastructure such as Linux servers or a fully serverless platform. As a result, the backend had to run on a Windows VPS with limited capacity.

While the system worked under normal conditions, this technical constraint created a critical liability during traffic surges:

- Cache Stampede: When a cached entry expired, Cloudflare forwarded hundreds of simultaneous revalidation requests to the backend. Standard HTTP cache headers and caching configuration did not prevent this; the burst of parallel requests (a "stampede") immediately overwhelmed the Windows VPS and caused it to crash.

- Windows Dependency Constraint: Because the core software required a Windows environment, the only readily available fix was upgrading to a larger, more expensive VPS. This merely raised the traffic level at which the stampede crashed the server, without fixing the underlying flaw: uncoordinated revalidation. It was an expensive "band-aid" for a structural problem.

- Reliability Risks: Unpredictable downtime during peak periods, the client's most important traffic windows, eroded user trust and threatened the client's reputation.
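
The stampede described in the first point can be reproduced with a naive cache-aside lookup. The sketch below is illustrative only: `fetchFromOrigin` and `naiveGet` are hypothetical names, `fetchFromOrigin` stands in for the Windows backend, and the burst of 300 is an arbitrary example of "hundreds" of requests. Because nothing coordinates concurrent misses, every request that sees the expired entry calls the origin.

```typescript
// Hypothetical naive cache-aside lookup that reproduces a cache stampede:
// nothing coordinates concurrent misses on the same key.
type NaiveEntry = { value: string; expiresAt: number };

let originCalls = 0;

// Stand-in for the Windows backend; counts how many requests reach it.
async function fetchFromOrigin(key: string): Promise<string> {
  originCalls++;
  return `fresh:${key}`;
}

const naiveCache = new Map<string, NaiveEntry>();

async function naiveGet(key: string, ttlMs: number, now: number): Promise<string> {
  const hit = naiveCache.get(key);
  if (hit && hit.expiresAt > now) return hit.value;
  // Every request that sees the expired entry reaches this line.
  const value = await fetchFromOrigin(key);
  naiveCache.set(key, { value, expiresAt: now + ttlMs });
  return value;
}

// An expired entry, then a burst of 300 concurrent requests for it.
naiveCache.set("/api/data", { value: "old", expiresAt: 0 });
const burst = Array.from({ length: 300 }, () => naiveGet("/api/data", 60000, 1));
Promise.all(burst).then(() => console.log(originCalls)); // 300
```

Every call checks the cache before any origin response has arrived, so all 300 see the expired entry and all 300 reach the backend.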

Together, these constraints made it impossible to guarantee uptime during high-traffic events and posed a serious risk to the platform's reliability.

Our Solution

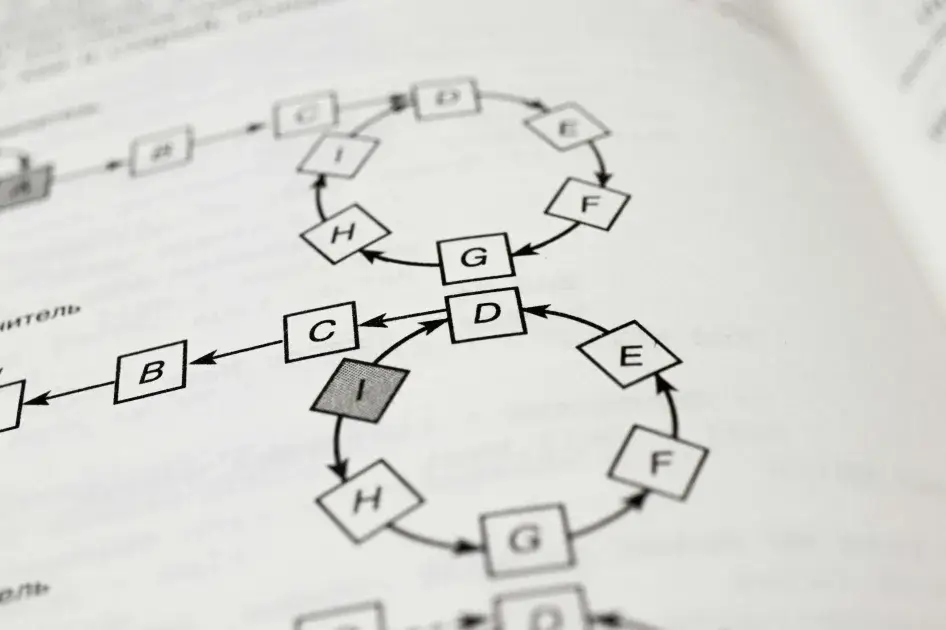

We developed a custom distributed caching algorithm in Cloudflare Workers that sits in front of the backend and shields it from traffic. Instead of trying to replace the Windows backend, which the client's software required, we built a layer around it that absorbs traffic spikes at the edge.

- Coordinated Revalidation: We implemented a mutex lock. When a cache entry expires, only the first request acquires the lock and triggers a backend update. All other concurrent requests either wait for that update or are served the existing cached data, so the VPS receives a single revalidation request instead of a surge.

- Stale-While-Revalidate Strategy: During the brief window when the system is fetching new data from the Windows software, we configured the cache to serve "stale" (previous) data to users. This ensures the user sees a working site instantly, rather than a loading spinner or an error page.

- Backend Offloading: By shifting the coordination logic to the edge, we reduced the VPS workload by over 90%, allowing the existing Windows infrastructure to handle traffic volumes that had previously crashed it.
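
Coordinated revalidation and stale-while-revalidate can be combined in one lookup path. The following is a minimal TypeScript sketch under stated assumptions, not the client's implementation: `CoalescingSwrCache` and `loadFromOrigin` are hypothetical names, a per-key in-flight promise plays the role of the mutex, and all state is held in memory within one isolate. A request that finds a fresh entry never touches the backend; a request that finds an expired entry receives the stale value immediately while at most one refresh per key runs against the origin; only a cold miss, with nothing stale to serve, waits for that shared refresh.

```typescript
// Minimal sketch: coordinated revalidation (one refresh per key) combined
// with stale-while-revalidate. All names are hypothetical; state is held
// in memory, so coordination only covers requests in the same isolate.
type CacheEntry<T> = { value: T; freshUntil: number };

class CoalescingSwrCache<T> {
  private entries = new Map<string, CacheEntry<T>>();
  // At most one pending origin request per key. An entry here is the
  // "lock"; later requests join this promise instead of fetching again.
  private inFlight = new Map<string, Promise<T>>();
  private loadFromOrigin: (key: string) => Promise<T>;
  private ttlMs: number;
  private clock: () => number;

  constructor(
    loadFromOrigin: (key: string) => Promise<T>,
    ttlMs: number,
    clock: () => number = () => Date.now(),
  ) {
    this.loadFromOrigin = loadFromOrigin;
    this.ttlMs = ttlMs;
    this.clock = clock;
  }

  async get(key: string): Promise<T> {
    const entry = this.entries.get(key);
    if (entry && entry.freshUntil > this.clock()) {
      return entry.value; // fresh hit: the origin is not contacted
    }
    const refresh = this.refresh(key); // starts or joins the single refresh
    if (entry) {
      // Stale-while-revalidate: answer now with the previous value. A failed
      // refresh is ignored here, so the stale value keeps being served.
      refresh.catch(() => undefined);
      return entry.value;
    }
    return refresh; // cold miss: nothing stale to serve, so wait
  }

  private refresh(key: string): Promise<T> {
    const running = this.inFlight.get(key);
    if (running) return running; // lock already held: join it

    const pending = this.loadFromOrigin(key).then(
      (value) => {
        this.entries.set(key, { value, freshUntil: this.clock() + this.ttlMs });
        this.inFlight.delete(key); // release the lock
        return value;
      },
      (err: unknown) => {
        this.inFlight.delete(key); // release the lock even on failure
        throw err;
      },
    );
    this.inFlight.set(key, pending);
    return pending;
  }
}
```

In a Worker, `get` might be called from the `fetch` handler with a cache key derived from the request URL. A production version would also cap how long stale data may be served and would need shared storage to coordinate across isolates and data centres; both are omitted here.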

This solution provided a scalable, cost-effective architecture that prioritised uptime and reliability for the client's operations.

Results

The result is a resilient caching system designed to prioritise uptime under all traffic conditions.

- 100% Uptime: The backend remained stable under heavy load, eliminating crashes caused by simultaneous revalidation requests.

- Unlimited Read Scalability: Traffic was absorbed at the edge, with backend revalidation limited to a single coordinated request regardless of volume.

- Cost Savings: The client avoided repeated VPS upgrades, reducing infrastructure costs without sacrificing performance.

- User Experience Priority: By prioritising availability (serving stale data) over strict consistency, we ensured that users no longer see a "Service Unavailable" message, regardless of traffic volume.

The platform can now absorb traffic spikes confidently, ensuring availability without relying on costly backend scaling.